Computer components aren't typically thought of as transforming entire businesses or industries, but a graphics processing unit that Nvidia released in 2023 did just that. The H100 data center chip has added more than $1 trillion to Nvidia's value, turning the company into an AI kingmaker overnight. It showed investors that the buzz around generative artificial intelligence is leading to real profits, at least for Nvidia and its most important suppliers.

Demand for the H100 is so high that some customers have to wait as long as six months to receive it.

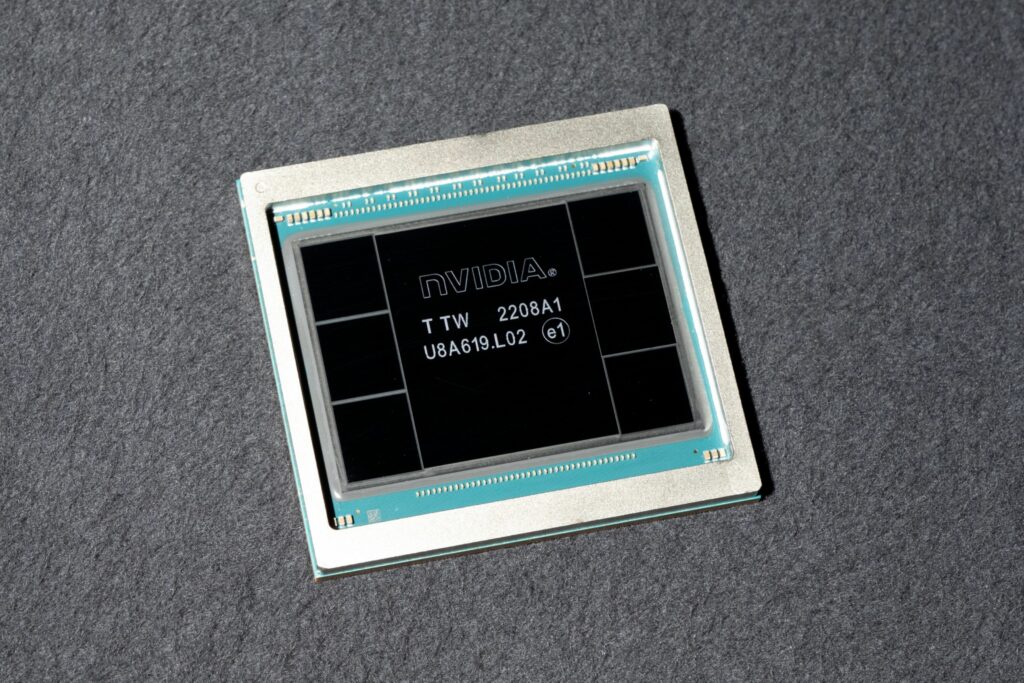

1. What is Nvidia's H100 chip?

The H100, named after computer science pioneer Grace Hopper, is a graphics processor. It is an enhanced version of the type of chip typically found in PCs, where it helps gamers get the most realistic visual experience. The H100, however, is optimized to process huge amounts of data and computation at high speeds, making it ideal for the power-intensive task of training AI models. Nvidia, founded in 1993, built this market with investments dating back nearly 20 years, betting that the ability to perform work in parallel would one day make its chips valuable in applications beyond gaming.

2. Why is the H100 special?

Generative AI platforms learn how to complete tasks such as translating text, summarizing reports, and synthesizing images by training on vast amounts of existing material. The more they see, the better they get at things like recognizing human speech or writing cover letters for jobs. They develop through trial and error, making billions of attempts to achieve proficiency and consuming vast amounts of computing power in the process. Nvidia says the H100 is four times faster at training so-called large language models (LLMs) and 30 times faster at responding to user prompts than its previous-generation chip, the A100. That performance advantage can be critical for companies rushing to train LLMs to perform new tasks.

3. How did Nvidia become a leader in AI?

The Santa Clara, California, company is a world leader in graphics chips, the part of a computer that generates the images you see on your screen. The most powerful of them are built with hundreds of processing cores that run multiple computational threads simultaneously and model complex physics such as shadows and reflections. Nvidia engineers realized in the early 2000s that they could retool graphics accelerators for other applications by splitting tasks into smaller chunks and working on them simultaneously. Just over a decade ago, AI researchers discovered that this type of chip could finally make their work practical.
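The idea of splitting a task into smaller chunks and working on them at the same time can be sketched in a few lines of Python. This is only an illustration of the decomposition, not of how a GPU or Nvidia's software actually works: the function names (`brighten`, `split`, `brighten_parallel`) and the pixel-brightening task are made up for the example, and Python threads will not deliver GPU-like speedups.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(chunk, amount=10):
    """Brighten each pixel value in one chunk, capping at 255."""
    return [min(255, p + amount) for p in chunk]


def split(data, n):
    """Split a list into at most n contiguous chunks of near-equal size."""
    if not data:
        return []
    size = -(-len(data) // n)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def brighten_parallel(pixels, workers=4):
    """Hand each chunk to a separate worker, then stitch the results together."""
    chunks = split(pixels, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() preserves chunk order, so the output lines up with the input.
        results = list(pool.map(brighten, chunks))
    return [p for chunk in results for p in chunk]


if __name__ == "__main__":
    print(brighten_parallel([0, 100, 250, 255], workers=2))
```

Each chunk can be processed without knowing anything about the others, which is the property that lets a chip with many cores work on all of them at once.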

4. Does Nvidia have any real competitors?

Nvidia controls about 80% of the market for accelerators in the AI data centers operated by Amazon.com's AWS, Alphabet's Google Cloud and Microsoft's Azure. Those companies' in-house efforts to build their own chips, and competing products from chipmakers like Advanced Micro Devices and Intel, have not had much of an impact on the AI accelerator market so far.

5. How can Nvidia stay ahead of its competitors?

Nvidia has been rapidly updating its products, including the software that supports its hardware, at a pace that no other company has been able to match. The company has also devised various cluster systems that allow customers to buy H100s in bulk and deploy them quickly. Chips like Intel's Xeon processors are capable of more complex data processing, but they have fewer cores and take much longer to work through the large amounts of information typically used to train AI software. Nvidia's data center division posted revenue of $22 billion in the final quarter of 2023, an 81% increase.

6. How do AMD and Intel compare to Nvidia?

AMD, the second-largest maker of computer graphics chips, announced in June a version of its Instinct series targeted at the market dominated by Nvidia products. AMD CEO Lisa Su told an audience at an event in San Francisco that the chip, called MI300X, has more memory to handle generative AI workloads. “We're still in the very early stages of the AI lifecycle,” she said in December. Intel has brought specific chips to market for AI workloads, but it has acknowledged that, for now, demand for data center graphics chips is growing faster than for processor units, which have traditionally been the company's strength. Nvidia's advantage goes beyond hardware performance. The company invented something called CUDA, a language for its graphics chips that allows them to be programmed for the types of work that underpin AI programs.

7. What will Nvidia release next?

Later this year, the H100 will be succeeded by the H200, after which Nvidia will release the B100 model with even more significant changes to the design. CEO Jensen Huang has acted as an ambassador for the technology, trying to get governments as well as private companies to buy early or risk being left behind by those who embrace AI. Nvidia also knows that if customers choose its technology for generative AI projects, it will have a much easier time selling upgrades than competitors trying to lure users away.

© 2024 Bloomberg