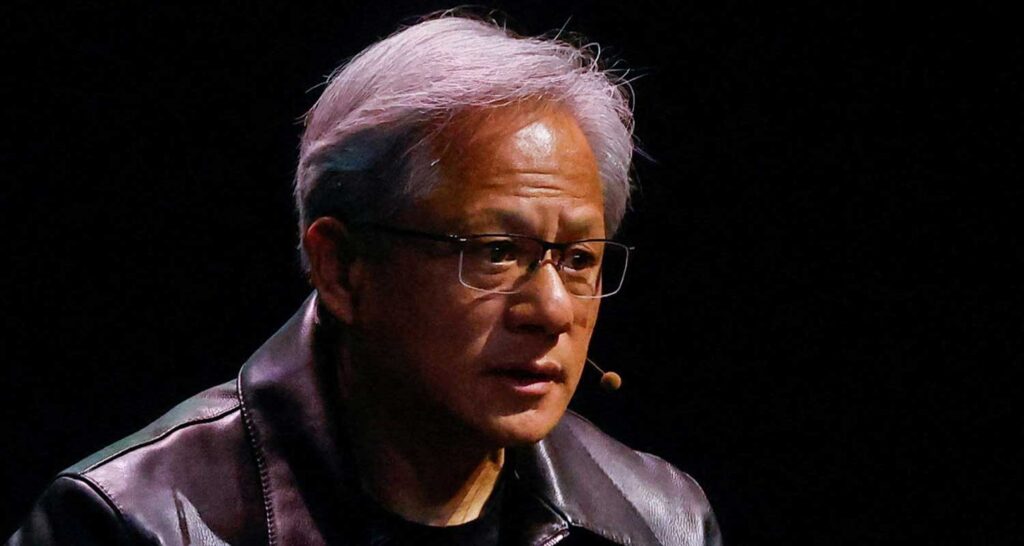

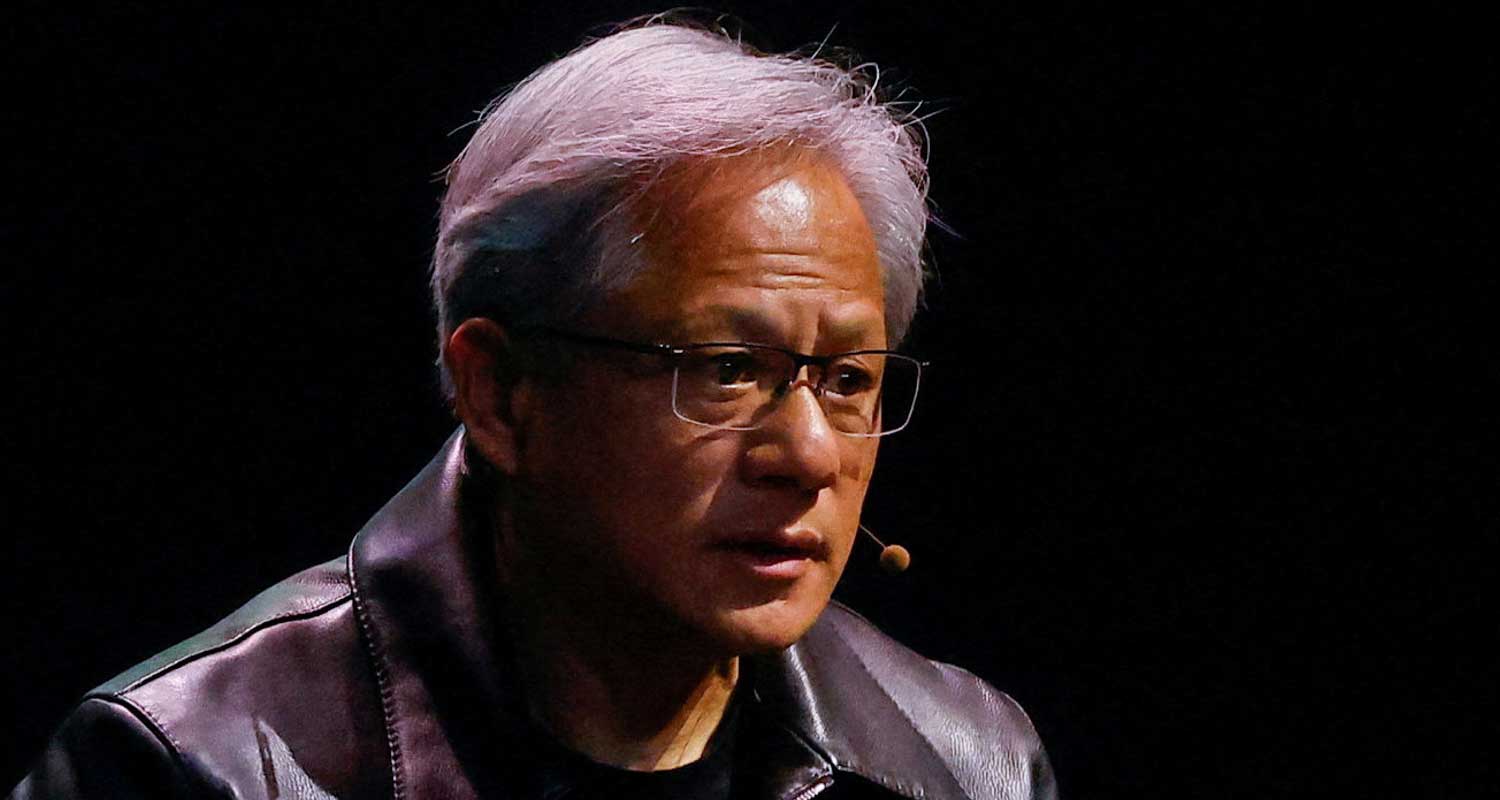

NVIDIA CEO Jensen Huang says artificial general intelligence could arrive in as little as five years, depending on the definition.

Huang, whose company is one of the world's leading makers of the AI chips used to build systems such as OpenAI's ChatGPT, was speaking at an economic forum at Stanford University. He was answering a question about how long it would take to reach one of Silicon Valley's long-standing goals: a computer that can think like a human.

Huang said the answer largely depends on how the goal is defined. If the definition is the ability to pass human tests, then artificial general intelligence (AGI) will arrive soon, Huang said.

“If I gave an AI… every test you can imagine, made a list of those tests and put it in front of the computer science industry, in five years it would succeed,” said Huang, whose company closed Friday with a market capitalization of more than $2 trillion.

Currently, AI can pass exams such as the bar exam, but it still struggles with specialized medical exams such as gastroenterology. Huang said it should be able to pass any of them within five years as well.

By other definitions, however, AGI may be much further away, Huang said, because scientists still disagree on how to describe how the human mind works. “Therefore, it's hard to achieve as an engineer,” he said, because engineers need clearly defined goals.

Fabs

Huang also addressed the question of how many more chip factories, known in the industry as “fabs,” are needed to support the expansion of the AI industry. According to media reports, OpenAI CEO Sam Altman believes more fabs are needed.

Huang said more chips will be needed, but the performance of each chip will also improve over time, limiting the number of chips needed.


“We're going to need more fabs. But remember, we're also significantly improving our algorithms and AI processing over time,” Huang said. “It's not as if the efficiency of computing will stay what it is today and demand will simply be sized to match. I think computing will improve a million times over 10 years.”

© 2024 Reuters